Preparing for ICML 2024: Main themes

Preparing for ICML 2024: Main themes

I did not have much time to prepare this year as quite busy with various things; And the conference is already next week! So be it.

Before jumping into the cluster-based “main themes”, I just highlight a couple of interesting papers I had time to read over the past couple of week-ends:

A unified framework for Large Language Model (LLM) agents using computational graphs. It introduces automatic graph optimizers for refining node-level prompts and improving agent orchestration. By representing agents as graphs, the framework facilitates the integration and enhancement of various LLM agents through node and edge optimization. Experimental results show the effectiveness of this approach in developing and automatically improving LLM agents. The framework aims to streamline and enhance the performance of language agents in various applications.

Critically examines the use of Data Shapley for data selection, highlighting its inconsistent performance across various settings. The authors propose a hypothesis testing framework showing that without specific utility function constraints, Data Shapley may perform no better than random selection. They identify a class of utility functions where Data Shapley excels and propose a heuristic for predicting its effectiveness.

Presents a universal transformer model for time series forecasting, aiming to handle diverse datasets with varying characteristics. The authors introduce a unified training framework that enhances model adaptability and performance across different types of time series data.

SAMformer, a model designed to enhance the performance of transformers in time series forecasting. SAMformer incorporates Sharpness-Aware Minimization (SAM) to navigate the loss landscape more effectively, preventing the model from falling into suboptimal local minima. Additionally, it employs channel-wise attention to improve focus on relevant features.

Introduces a framework that uses selective retrieval-augmented generation (RAG) to improve code completion tasks. Repoformer evaluates whether retrieval will enhance performance before retrieving context, improving efficiency and accuracy. It employs self-supervised learning for robust code completion and self-evaluation.

Introduces a novel method for assessing the task-specific accuracy of Retrieval-Augmented Large Language Models (RAG). This evaluation is performed through automatically generated synthetic exams composed of multiple-choice questions derived from the task’s document corpus. The approach leverages Item Response Theory (IRT) to estimate the quality and informativeness of the exams, iteratively refining them by eliminating less informative questions. The method demonstrates its effectiveness across various open-ended question-answering tasks, providing insights into factors impacting RAG performance, such as retrieval algorithms and model size.

Chain of Code: Reasoning with a Language Model-Augmented Code Emulator

A framework that enhances language model (LM) reasoning by combining code execution and LM-based code emulation. CoC generates code or pseudocode for problem-solving and uses an LM to simulate execution when code can’t be run by an interpreter. This approach outperforms traditional methods like Chain of Thought in complex reasoning tasks.

Now, let’s turn to the (basic) analysis of the “main themes”.

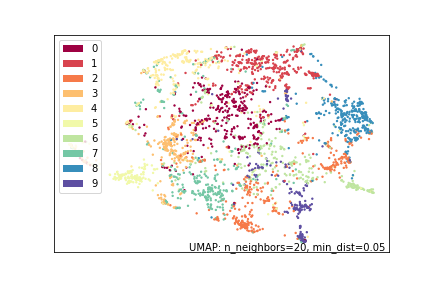

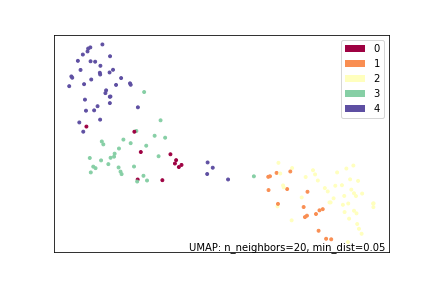

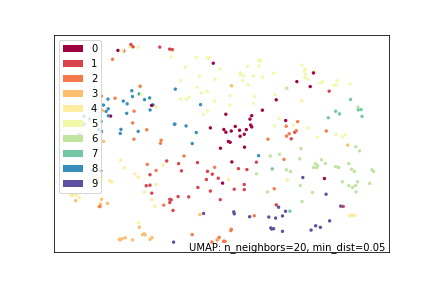

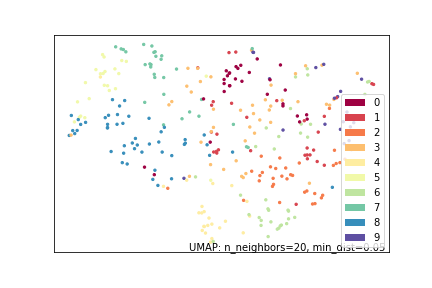

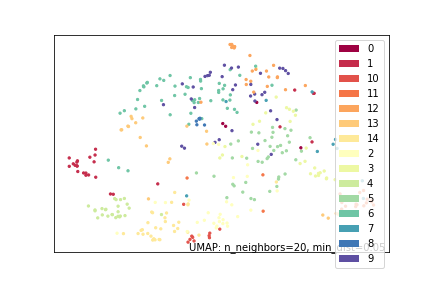

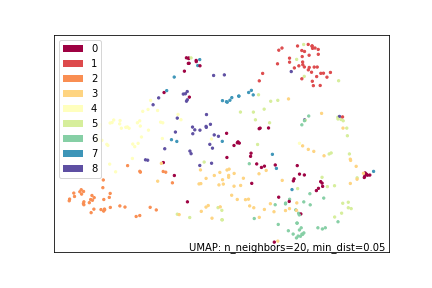

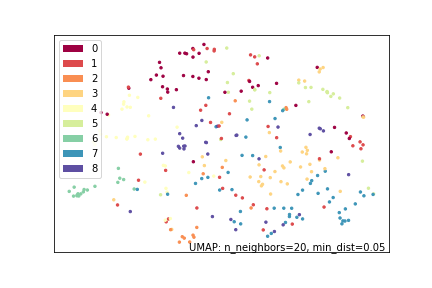

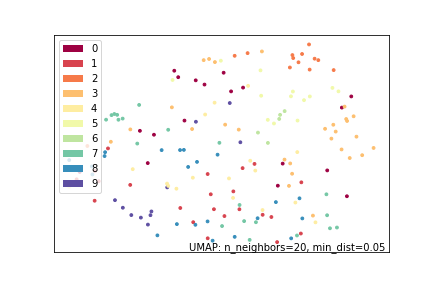

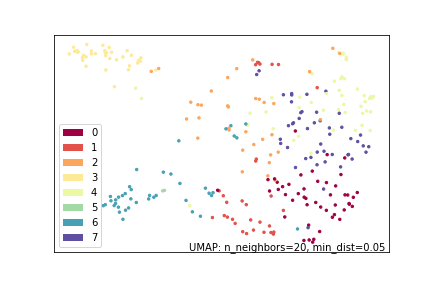

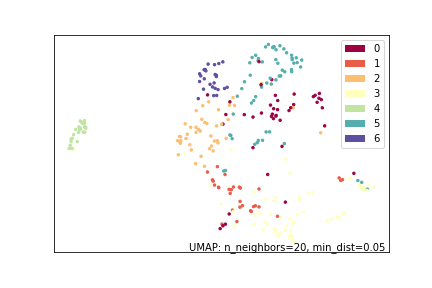

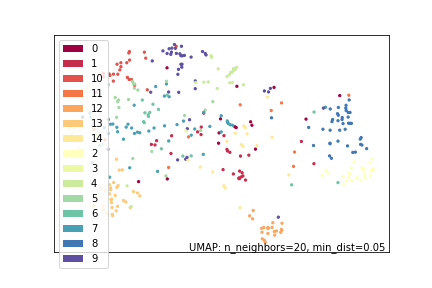

In anticipation of ICML 2024, I have downloaded the titles and abstracts of the accepted papers and conducted some basic DS/NLP analysis, including embeddings, UMAP, and prompting to uncover the main themes, and also quickly identify the papers of interest (to me).

Below are 10 clusters (themselves split into sub-clusters) along with links to the papers I plan to read carefully. If there is any associated GitHub code available, I may also experiment with it.

ICML 2024 Abstracts:

Cluster id: 0

Federated and Differentially Private Learning on Heterogeneous Data

Federated Learning is decentralized learning where one sends the model (or parts of it) to several devices so it trains locally on their data, and one gets back model parameters or gradients to update the overall model. Doing so, it helps preserves privacy, data security. It also allows for greater personalization of the model predictions by fine-tuning on a given user’s device. It can also be a way to efficiently train models by parallelizing the training process across very many machines.

Potential application: Could be used in retail banking to train a ‘global’ fraud detection model across many banks and geographies without the need to share customers’ transactions data.

Differential Privacy is a framework to ensure that the output of a model does not reveal too much information about any single data point on which the model was trained. In applications where personal information of individuals is a concern, it can be used to ensure that their data won’t be leaked by the model.

Potential application for forecasting: Differential Privacy controlled noise addition mechanism can make the model more robust (regularization).

1. Federated Learning Enhancements and Efficiency

- “Accelerating Heterogeneous Federated Learning with Closed-form Classifiers”

- “A Federated Stochastic Multi-level Compositional Minimax Algorithm for Deep AUC Maximization”

- “FedMBridge: Bridgeable Multimodal Federated Learning”

- “Achieving Lossless Gradient Sparsification via Mapping to Alternative Space in Federated Learning”

- “Balancing Similarity and Complementarity for Federated Learning”

- “AegisFL: Efficient and Flexible Privacy-Preserving Byzantine-Robust Cross-silo Federated Learning”

- “FedLMT: Tackling System Heterogeneity of Federated Learning via Low-Rank Model Training with Theoretical Guarantees”

- “Recurrent Early Exits for Federated Learning with Heterogeneous Clients”

- “Federated Full-Parameter Tuning of Billion-Sized Language Models with Communication Cost under 18 Kilobytes”

- “FedTYPE: Bridging Model Heterogeneity in Federated Learning via Uncertainty-based Asymmetrical Reciprocity Learning”

- “Overcoming Data and Model heterogeneities in Decentralized Federated Learning via Synthetic Anchors”

- “FedREDefense: Defending against Model Poisoning Attacks for Federated Learning using Model Update Reconstruction Error”

- “FedBPT: Efficient Federated Black-box Prompt Tuning for Large Language Models”

- “FedCal: Achieving Local and Global Calibration in Federated Learning via Aggregated Parameterized Scaler”

- “Locally Estimated Global Perturbations are Better than Local Perturbations for Federated Sharpness-aware Minimization”

- “SignSGD with Federated Defense: Harnessing Adversarial Attacks through Gradient Sign Decoding”

- “Federated Continual Learning via Prompt-based Dual Knowledge Transfer”

- “FEDU: Federated Unsupervised Data Augmentation for Improving Generalization”

- “Federated Optimization with Doubly Regularized Drift Correction”

- “Federated Learning with Dynamic Scheduling and Balancing Timing Constraints”

- “Profiling: Efficient Optimization of Neural Networks with Federated Learning”

- “Harmonizing Generalization and Personalization in Federated Prompt Learning”

- “FedSC: Provable Federated Self-supervised Learning with Spectral Contrastive Objective over Non-i.i.d. Data”

- “Self-Driven Entropy Aggregation for Byzantine-Robust Heterogeneous Federated Learning”

2. Differential Privacy and Privacy-Preserving Machine Learning

- “Position: Considerations for Differentially Private Learning with Large-Scale Public Pretraining”

- “Privacy-Preserving Data Release Leveraging Optimal Transport and Particle Gradient Descent”

- “Differentially Private Representation Learning via Image Captioning”

- “Dynamic Byzantine-Robust Learning: Adapting to Switching Byzantine Workers”

- “Privacy-Preserving Embedding via Look-up Table Evaluation with Fully Homomorphic Encryption”

- “Privacy Profiles for Private Selection”

- “A New Theoretical Perspective on Data Heterogeneity in Federated Averaging”

- “Rethinking DP-SGD in Discrete Domain: Exploring Logistic Distribution in the Realm of signSGD”

- “Better Locally Private Sparse Estimation Given Multiple Samples Per User”

- “Neural Collapse meets Differential Privacy: Curious behaviors of NoisyGD with Near-Perfect Representation Learning”

- “Proactive DP: A Multiple Target Optimization Framework for DP-SGD”

- “Split-and-Denoise: Protect large language model inference with local differential privacy”

- “Local Differentially Private Decentralized Stochastic Bilevel Optimization with Guaranteed Convergence Accuracy”

- “Provable Privacy with Non-Private Pre-Processing”

- “Differentially Private Bias-Term Fine-tuning of Foundation Models”

- “Achieving Lossless Gradient Sparsification via Mapping to Alternative Space in Federated Learning”

- “Making old things new: a unified algorithm for differentially private clustering”

- “PID: Prompt-Independent Data Protection Against Latent Diffusion Models”

- “Private and Federated Stochastic Convex Optimization: Efficient Strategies for Centralized Systems”

- “Tuning-free Estimation and Inference of Cumulative Distribution Function under Local Differential Privacy”

- “Identifying Optimal Privacy Parameters for the Best Accuracy under $(\epsilon,\delta)$-DP”

- “Differentially Private Domain Adaptation with Theoretical Guarantees”

- “DPZero: Private Fine-Tuning of Language Models without Backpropagation”

3. Membership Inference Attacks and Model Robustness

- “Mitigating Privacy Risk in Membership Inference by Convex-Concave Loss”

- “How Private are DP-SGD Implementations?”

- “Verifying Machine Unlearning is Fragile”

- “Recovering Labels from Local Updates in Federated Learning”

- “Low-Cost High-Power Membership Inference Attacks”

- “Understanding Robustness in Pipeline-Parallelism-Based Decentralized Training”

- “Privacy Backdoors: Stealing Data with Corrupted Pretrained Models”

- “Profile Reconstruction from Private Sketches”

- “Membership Inference Attacks on Diffusion Models via Quantile Regression”

- “Techniques for Private Membership Inference in Different Learning Models”

- “Auditing Private Prediction”

- “Differentially Private Inference for Neural Networks without Training Data”

4. Novel Approaches in Federated and Privacy-Preserving Learning

- “COALA: A Practical and Vision-Centric Federated Learning Platform”

- “Shifted Interpolation for Differential Privacy”

- “MH-pFLID: Model Heterogeneous personalized Federated Learning via Injection and Distillation for Medical Data Analysis”

- “FairProof: Confidential and Certifiable Fairness for Neural Networks”

- “ViP: A Differentially Private Foundation Model for Computer Vision”

- “Dynamic Byzantine-Robust Learning: Adapting to Switching Byzantine Workers”

- “Byzantine-Resilient Federated Learning: Impact of Client Subsampling and Local Updates”

- “Beyond the Calibration Point: Mechanism Comparison in Differential Privacy”

- “FedSaC: Federated Similarity and Complementarity Learning”

- “Collaborative Differentially Private Personalization via Generative Data”

- “Differentially Private Sum-Product Networks”

- “Byzantine-Resilient Federated Learning with Serverless Aggregation”

5. Optimizations and Algorithms for Learning in Distributed Systems

- “Towards the Theory of Unsupervised Federated Learning: Non-asymptotic Analysis of Federated EM Algorithms”

- “Adaptive Group Personalization for Federated Mutual Transfer Learning”

- “Federated Representation Learning in the Under-Parameterized Regime”

- “Improved Bounds for Pure Private Agnostic Learning: Item-Level and User-Level Privacy”

- “Causally Motivated Personalized Federated Learning with Shortcut-Averse Information-Theoretic Regularization”

- “Decomposable Submodular Maximization in Federated Setting”

- “Sequential Decision-Making in Federated Machine Learning”

- “PrE-Text: Training Text-based Models Using Proximal Federated Evolution”

- “Improved Modeling of Federated Data Using Mixtures-of-Dirichlet-Multinomials”

Cluster id: 1

Innovative Methods in Vision and Language Models: Enhancing Robustness, Self-Supervised Learning, and Domain Adaptation

1. Multimodal Learning and Vision-Language Alignment

- “Sparse-to-dense Multimodal Image Registration via Multi-Task Learning”

- “Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model”

- “MLIP: Efficient Multi-Perspective Language-Image Pretraining with Exhaustive Data Utilization”

- “CrossGET: Cross-Guided Ensemble of Tokens for Accelerating Vision-Language Transformers”

- “STELLA: Continual Audio-Video Pre-training with SpatioTemporal Localized Alignment”

- “Libra: Building Decoupled Vision System on Large Language Models”

- “FlashST: A Simple and Universal Prompt-Tuning Framework for Traffic Prediction”

- “Language-Driven Cross-Modal Classifier for Zero-Shot Multi-Label Image Recognition”

- “VideoPoet: A Large Language Model for Zero-Shot Video Generation”

- “Video-LaVIT: Unified Video-Language Pre-training with Decoupled Visual-Motional Tokenization”

- “NExT-GPT: Any-to-Any Multimodal LLM”

2. Vision Transformers and Efficient Architectures

- “Enhancing Vision Transformer: Amplifying Non-Linearity in Feedforward Network Module”

- “Exploring Training on Heterogeneous Data with Mixture of Low-rank Adapters”

- “Visual Transformer with Differentiable Channel Selection: An Information Bottleneck Inspired Approach”

- “Sparse Model Inversion: Efficient Inversion of Vision Transformers for Data-Free Applications”

- “SCoRe: Submodular Combinatorial Representation Learning”

- “Representation Surgery for Multi-Task Model Merging”

- “Sparse-to-dense Multimodal Image Registration via Multi-Task Learning”

3. Self-Supervised Learning and Dataset Distillation

- “Matrix Information Theory for Self-Supervised Learning”

- “Sharpness-Aware Data Generation for Zero-shot Quantization”

- “Improving Interpretation Faithfulness for Vision Transformers”

- “Dissecting Multimodality in VideoQA Transformer Models by Impairing Modality Fusion”

- “Learning from Memory: Non-Parametric Memory Augmented Self-Supervised Learning of Visual Features”

- “Representation Surgery for Multi-Task Model Merging”

- “Autoencoding Conditional Neural Processes for Representation Learning”

- “One for All: A Universal Generator for Concept Unlearnability via Multi-Modal Alignment”

4. Image and Video Generation

- “Creative Text-to-Audio Generation via Synthesizer Programming”

- “Fast Text-to-3D-Aware Face Generation and Manipulation via Direct Cross-modal Mapping and Geometric Regularization”

- “Prompting4Debugging: Red-Teaming Text-to-Image Diffusion Models by Finding Problematic Prompts”

- “Memory Consolidation Enables Long-Context Video Understanding”

- “Compositional Text-to-Image Generation with Dense Blob Representations”

- “MagicLens: Self-Supervised Image Retrieval with Open-Ended Instructions”

- “Genie: Generative Interactive Environments”

- “Image Fusion via Vision-Language Model”

- “High-Order Contrastive Learning with Fine-grained Comparative Levels for Sparse Ordinal Tensor Completion”

5. Few-Shot Learning and Domain Adaptation

- “Gradual Divergence for Seamless Adaptation: A Novel Domain Incremental Learning Method”

- “Learning Domain-Invariant Temporal Dynamics for Few-Shot Action Recognition”

- “DMTG: One-Shot Differentiable Multi-Task Grouping”

- “Meta Evidential Transformer for Few-Shot Open-Set Recognition”

- “Improving Prototypical Visual Explanations with Reward Reweighing, Reselection, and Retraining”

- “Compositional Few-Shot Class-Incremental Learning”

- “One Meta-tuned Transformer is What You Need for Few-shot Learning”

6. Adversarial Robustness and Model Security

- “Revealing the Dark Secrets of Extremely Large Kernel ConvNets on Robustness”

- “Robust CLIP: Unsupervised Adversarial Fine-tuning of Vision Embeddings for Robust Large Vision-Language Models”

- “ON Mechanistic Knowledge Localization in Text-to-Image Generative Models”

- “Removing Spurious Concepts from Neural Network Representations via Joint Subspace Estimation”

- “Residual-Conditioned Optimal Transport: Towards Structure-Preserving Unpaired and Paired Image Restoration”

- “Sharpness-Aware Data Generation for Zero-shot Quantization”

- “Mapping the Multiverse of Latent Representations”

7. Explainability and Interpretability

- “Disentanglement Learning via Topology”

- “Gradient-based Visual Explanation for Transformer-based CLIP”

- “Visual-Text Cross Alignment: Refining the Similarity Score in Vision-Language Models”

- “From Vision to Audio and Beyond: A Unified Model for Audio-Visual Representation and Generation”

- “InterpreTabNet: Distilling Predictive Signals from Tabular Data by Salient Feature Interpretation”

- “Towards generalizable particle picking in Cryo-EM images by leveraging Masked AutoEncoders”

- “Understanding Retrieval-Augmented Task Adaptation for Vision-Language Models”

8. Medical Imaging and Bioinformatics

- “Scale-Free Image Keypoints Using Differentiable Persistent Homology”

- “Enhancing Single-Cell VAE Latent Space via Semi-Supervision”

- “Multimodal Prototyping for cancer survival prediction”

- “A Touch, Vision, and Language Dataset for Multimodal Alignment”

- “Mixed-Feature Selection in Histopathology Images”

- “SleepFM: Multi-modal Representation Learning for Sleep Across Brain Activity, ECG and Respiratory Signals”

- “PLUTO: Pathology-Universal Transformer”

- “X-Oscar: A Progressive Framework for High-quality Text-guided 3D Animatable Avatar Generation”

9. Image Quality and Compression

- “Residual Quantization with Implicit Neural Codebooks”

- “Compress Clean Signal from Noisy Raw Image: A Self-Supervised Approach”

- “OptoDex: Gap-Free Object Recognition Through Adversarial Erasers”

- “Hyperfields: Towards Zero-Shot Generation of NeRFs from Text”

- “Hyperbolic Active Learning for Semantic Segmentation under Domain Shift”

- “A Linear Time and Space Local Point Cloud Geometry Encoder via Vectorized Kernel Mixture”

10. Miscellaneous (Various Novel Methods and Applications)

- “Receptive Fields As Experts in Convolutional Neural Architectures”

- “Slicedit: Zero-Shot Video Editing With Text-to-Image Diffusion Models Using Spatio-Temporal Slices”

- “Bootstrap AutoEncoders With Contrastive Paradigm for Self-supervised Gaze Estimation”

- “IIANet: An Intra- and Inter-Modality Attention Network for Audio-Visual Speech Separation”

- “Mapping the Multiverse of Latent Representations”

- “Tell, Don’t Show: Language Guidance Eases Transfer Across Domains in Images and Videos”

- “EVMerge: Language-Aware Vision Merging Under Uncertainty”

Cluster id: 2

Advanced Techniques and Applications in Reinforcement Learning

1. Safe and Robust Reinforcement Learning

- “Run-Time Task Composition with Safety Semantics”

- “Iterative Regularized Policy Optimization with Imperfect Demonstrations”

- “Adaptive Horizon Actor-Critic for Policy Learning in Contact-Rich Differentiable Simulation”

- “Adaptive Advantage-Guided Policy Regularization for Offline Reinforcement Learning”

- “Constrained Reinforcement Learning Under Model Mismatch”

- “EfficientZero V2: Mastering Discrete and Continuous Control with Limited Data”

2. Exploration and Sample Efficiency

- “Rich-Observation Reinforcement Learning with Continuous Latent Dynamics”

- “How to Explore with Blindness: State Entropy Maximization in POMDPs”

- “Compound Returns Reduce Variance in Reinforcement Learning”

- “Exploration-Driven Policy Optimization in RLHF: Theoretical Insights on Efficient Data Utilization”

- “Single-Trajectory Distributionally Robust Reinforcement Learning”

- “Scalable Safe Policy Improvement for Factored Multi-Agent MDPs”

3. Learning Representations and Models

- “Learning Causal Dynamics Models in Object-Oriented Environments”

- “Foundation Policies with Hilbert Representations”

- “Simple Ingredients for Offline Reinforcement Learning”

- “Learning the Target Network in Function Space”

- “Skill-Enhanced Reinforcement Learning Acceleration from Demonstrations”

4. Multi-Agent Reinforcement Learning

- “Subequivariant Reinforcement Learning in 3D Multi-Entity Physical Environments”

- “FightLadder: A Benchmark for Competitive Multi-Agent Reinforcement Learning”

- “Impact of Decentralized Learning on Player Utilities in Stackelberg Games”

5. Interactive and Online Learning

- “Rapid Learning without Catastrophic Forgetting in the Morris Water Maze”

- “Agnostic Interactive Imitation Learning: New Theory and Practical Algorithms”

- “Learning with Adaptive Resource Allocation”

6. Imitation and Preference Learning

- “Discovering Multiple Solutions from a Single Task in Offline Reinforcement Learning”

- “Imitation Learning from Purified Demonstrations”

- “Iterative Preference Learning from Human Feedback: Bridging Theory and Practice for RLHF under KL-constraint”

- “Preference-Based Reinforcement Learning”

7. Deep Learning Approaches and Optimization

- “ReLU to the Rescue: Improve Your On-Policy Actor-Critic with Positive Advantages”

- “Learning Causal Dynamics Models in Object-Oriented Environments”

- “Multi-Agent Policy Learning with Evolutionary Strategies”

- “Bayesian Design Principles for Offline-to-Online Reinforcement Learning”

8. Policy Optimization and Generalization

- “Cross-Domain Policy Adaptation by Capturing Representation Mismatch”

- “Cross-Domain Offline Reinforcement Learning: Collaborative Single-Policy Coverage Suffices”

- “How to Leverage Diverse Demonstrations in Offline Imitation Learning”

9. Reward Function Design and Optimization

- “Augmenting Decision with Hypothesis in Reinforcement Learning”

- “Reward Warping for Robust Offline Reinforcement Learning”

10. Miscellaneous Challenges in Reinforcement Learning

- “Position: Rethinking Post-Hoc Search-Based Neural Approaches for Solving Large-Scale Traveling Salesman Problems”

- “Framework for Markov Decision Processes with Temporal Logic Specifications”

Cluster id: 3

Advanced Techniques and Theoretical Insights in Machine Learning Optimization and Related Algorithms

1. Differential Games and Game Theory

- “State-Constrained Zero-Sum Differential Games with One-Sided Information”

2. Compression and Data Efficiency

- “Debiased Distribution Compression”

3. Complexity and Generalization in Optimization

- “Information Complexity of Stochastic Convex Optimization: Applications to Generalization and Memorization”

4. Convergence and Gradient Methods

- “On the Last-Iterate Convergence of Shuffling Gradient Methods”

- “Accelerated Policy Gradient: On the Convergence Rates of the Nesterov Momentum for Reinforcement Learning”

- “Convergence of Some Convex Message Passing Algorithms to a Fixed Point”

5. Reinforcement Learning and Bandit Problems

- “Learning from Streaming Data when Users Choose”

- “Best Arm Identification for Stochastic Rising Bandits”

- “Leveraging (Biased) Information: Multi-armed Bandits with Offline Data”

6. Change Detection and Sequential Methods

- “Reducing sequential change detection to sequential estimation”

- “Sequential Kernel Goodness-of-fit Testing”

7. Bilevel and Hyperparameter Optimization

- “Distributed Bilevel Optimization with Communication Compression”

- “Optimal Hessian/Jacobian-Free Nonconvex-PL Bilevel Optimization”

8. Stochastic and Composite Optimization

- “MoMo: Momentum Models for Adaptive Learning Rates”

- “Optimal Kernel Quantile Learning with Random Features”

- “Efficient Stochastic Approximation of Minimax Excess Risk Optimization”

9. Sparse and High-Dimensional Problems

- “Weighted distance nearest neighbor condensing”

- “Improving Computational Complexity in Statistical Models with Local Curvature Information”

- “Sparse Dimensionality Reduction Revisited”

10. Matrix and Tensor Methods

- “MC-GTA: Metric-Constrained Model-Based Clustering using Goodness-of-fit Tests with Autocorrelations”

- “On the Error-Propagation of Inexact Hotelling’s Deflation for Principal Component Analysis”

- “Random matrix theory improved Frechet mean of symmetric positive definite matrices”

11. Bayesian Methods and Inference

- “Faster Sampling via Stochastic Gradient Proximal Sampler”

- “Stability and Generalization of Stochastic Compositional Gradient Descent Algorithms”

- “Efficient Algorithms for Empirical Group Distributional Robust Optimization and Beyond”

12. Kernel Methods and Gaussian Processes

- “Slicing with Stein Kernels for Faster Adaptive Monte Carlo”

- “KernelSHAP-IQ: Weighted Least Square Optimization for Shapley Interactions”

13. Graph Clustering and Network Optimization

- “Dynamic Metric Embedding into lp Space”

- “Faster Streaming and Scalable Algorithms for Finding Directed Dense Subgraphs in Large Graphs”

- “Efficient Low-Rank Matrix Estimation, Experimental Design, and Arm-Set-Dependent Low-Rank Bandits”

14. Theoretical and Practical Improvements in Machine Learning

- “Fair Resource Allocation in Multi-Task Learning”

- “Faster Adaptive Optimizers for Nonconvex Problems: From Theory to Practice”

- “Robust and Conjugate Gaussian Process Regression”

15. Quantum Algorithms and Applications

- “Quantum Algorithms and Lower Bounds for Finite-Sum Optimization”

Cluster id: 4

Best Practices and Methods in Aligning LLMs with Human Preferences and Ensuring Ethical and Safe AI Development

1. Improving Language Models with Guidance and Optimization Techniques

- “Stay on Topic with Classifier-Free Guidance”

- “Improving Factuality and Reasoning Language Models through Multiagent Debate”

- “Improving Open-Ended Text Generation via Adaptive Decoding”

- “Towards Efficient Exact Optimization of Language Model Alignment”

- “Automated Evaluation of Retrieval-Augmented Language Models with Task-Specific Exam Generation”

- “Revisiting Character-level Adversarial Attacks for Language Models”

- “Learning to Model the World With Language”

- “Promptbreeder: Self-Referential Self-Improvement via Prompt Evolution”

- “Optimizing watermarks for large language models”

2. Aligning Language Models with Human Preferences and Values

- “Position Paper: A Safe Harbor for AI Evaluation and Red Teaming”

- “Human Alignment of Large Language Models through Online Preference Optimisation”

- “Self-Rewarding Language Models”

- “Nash Learning from Human Feedback”

- “Position Paper: Building Guardrails for Large Language Models”

- “RigorLLM: Resilient Guardrails for Large Language Models against Undesired Content”

- “Exploring the LLM Journey from Cognition to Expression with Linear Representations”

3. Safety, Reliability, and Robustness in Large Language Models

- “PARDEN, Can You Repeat That? Defending against Jailbreaks via Repetition”

- “Position Paper: Quantifying Policy Impacts on Online Harms – A Call for Machine Learning-powered Assessment of the EU Digital Services Act”

- “Assessing the Brittleness of Safety Alignment via Pruning and Low-Rank Modifications”

- “Position Paper: Key Claims in LLM Research Have a Long Tail of Footnotes”

- “AI for software development at Google”

- “Transforming and Combining Rewards for Aligning Large Language Models”

4. Adaptive and In-Context Learning in Large Language Models

- “In-Context Unlearning: Language Models as Few-Shot Unlearners”

- “Is In-Context Learning in Large Language Models Bayesian? A Martingale Perspective”

- “ReMax: A Simple, Effective, and Efficient Reinforcement Learning Method for Aligning Large Language Models”

- “Learning and Forgetting Unsafe Examples in Large Language Models”

- “Understanding the Learning Dynamics of Alignment with Human Feedback”

- “Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision”

- “Neighboring Perturbations of Knowledge Editing on Large Language Models”

5. Enhancements and Innovations in Model Functionality and Coding

- “BRAIn: Bayesian Reward-conditioned Amortized Inference for natural language generation from feedback”

- ”$\texttt{MoE-RBench}$: Towards Building Reliable Language Models with Sparse Mixture-of-Experts”

- “Physics of Language Models: Part 3.1, Knowledge Storage and Extraction”

- “AutoOS: Make Your OS More Powerful by Exploiting Large Language Models”

6. Discovery and Research with Generative AI

- “LLM and Simulation as Bilevel Optimizers: A New Paradigm to Advance Physical Scientific Discovery”

- “BetterV: Controlled Verilog Generation with Discriminative Guidance”

- “MAX: Multimodal Extreme Networks for Dynamic Tasks”

- “RLFV: Learning from Verbal Feedback without Overgeneralization”

7. Multimodal and Specialized AI Applications

- “TravelPlanner: A Benchmark for Real-World Planning with Language Agents”

- Testing the limit of what current LLMs can do on the planning front. For now, limited. Provides a benchmark / sandbox to test LLMs planning capabilities.

- ”$\texttt{MoE-RBench}$: Towards Building Reliable Language Models with Sparse Mixture-of-Experts”

- “Keypoint-based Progressive Chain-of-Thought Distillation for LLMs”

- “Using Left and Right Brains Together: Towards Vision and Language Planning”

8. Model Interpretability and Transparency

- “Understanding Preference Fine-Tuning for Large Language Models”

- “Self-Exploring Language Models: Active Preference Elicitation for Online Alignment”

- “AI Control: Improving Safety Despite Intentional Subversion”

- “Neighboring Perturbations of Knowledge Editing on Large Language Models”

- “Feedback Loops With Language Models Drive In-Context Reward Hacking”

9. Task-Specific Performance and Capabilities of LLMs

- “TravelPlanner: A Benchmark for Real-World Planning with Language Agents”

- ”$\texttt{MoE-RBench}$: Towards Building Reliable Language Models with Sparse Mixture-of-Experts”

- “Physics of Language Models: Part 3.1, Knowledge Storage and Extraction”

- “Embodied CoT Distillation From LLM To Off-the-shelf Agents”

- “Vision-Language Models Provide Promptable Representations for Reinforcement Learning”

Cluster id: 5

Dynamics and Optimization in Neural Network Training

1. Optimization and Training Dynamics

- “Bias of Stochastic Gradient Descent or the Architecture: Disentangling the Effects of Overparameterization of Neural Networks”

- “SGD vs GD: Rank Deficiency in Linear Networks”

- “Critical feature learning in deep neural networks”

- “On the Implicit Bias of Adam”

- “Repetita Iuvant: Data Repetition Allows SGD to Learn High-Dimensional Multi-Index Functions”

- “Where Do Large Learning Rates Lead Us? A Feature Learning Perspective”

- “Gradient Descent with Polyak’s Momentum Finds Flatter Minima via Large Catapults”

- “The optimization landscape of Spectral neural network”

- “An Improved Finite-time Analysis of Temporal Difference Learning with Deep Neural Networks”

2. Neural Architecture and Generalization

- “Provable Multi-Task Representation Learning by Two-Layer ReLU Neural Networks”

- “Random matrix theory analysis of neural network weight matrices”

- “Expressivity of Neural Networks with Fixed Weights and Learned Biases”

- “Get rich quick: exact solutions reveal how unbalanced initializations promote rapid feature learning”

- “Position Paper: The No Free Lunch Theorem, Kolmogorov Complexity, and the Role of Inductive Biases in Machine Learning”

- “Deconstructing the Goldilocks Zone of Neural Network Initialization”

- “On a Neural Implementation of Brenier’s Polar Factorization”

- “Transformers Learn Nonlinear Features In Context: Nonconvex Mean-field Dynamics on the Attention Landscape”

3. Regularization and Robustness

- “SGD vs GD: Rank Deficiency in Linear Networks”

- “On the Implicit Bias of Adam”

- “Hidden Traveling Waves bind Working Memory Variables in Recurrent Neural Networks”

- “On the Diminishing Returns of Width for Continual Learning”

- “Can Implicit Bias Imply Adversarial Robustness?”

- “Loss landscape geometry reveals stagewise development of transformers”

- “Invariant Representations of Neural Networks via Differential Regularization”

4. Neural Network Implementation and Architecture Search

- “Expressivity of Neural Networks with Fixed Weights and Learned Biases”

- “Adaptive Gradient Regularization”

- “Provable Multi-Task Representation Learning by Two-Layer ReLU Neural Networks”

- “Fully Asynchronous CNNs: New Architecture and Implementation”

- “Proxy Constraints for Improved Neural Architecture Search”

- “Data-free Neural Representation Compression with Riemannian Neural Dynamics”

5. Neuro-Symbolic AI and Neuroscience Inspired Networks

- “Neural network learns low-dimensional polynomials with SGD near the information-theoretic limit”

- “Neural Symmetry Detection for Learning Neural Network Constraints”

- “Progress Measures for Grokking on Real-world Tasks”

- “Neural Tangent Kernels for Axis-Aligned Tree Ensembles”

- “Synaptic Plasticity for Dynamic Link Weight Adjustment”

6. Spiking Neural Networks and Neuroscience Models

- “Sparsest Models Elude Pruning: An Exposé of Pruning’s Current Capabilities”

- “Decoupling the Interaction of Spiking Neurons for Improved Learning Dynamics”

- “SNNs in High-Dimensional Systems: New Approaches and Empirical Studies”

- “Adaptive Spike-Timing Dependent Plasticity”

- “Neuromorphic Learning Algorithms: A Comprehensive Survey”

7. Physics-Informed Neural Networks and Scientific Applications

- “Data-free Neural Representation Compression with Riemannian Neural Dynamics”

- “New Insights into Neural Network Feature Space”

- “Challenges in Training PINNs: A Loss Landscape Perspective”

- “On the metastability of learning algorithms in physics-informed neural networks: A case study on Schrödinger operators”

- “Physics-Informed Neural Representations: From Theory to Practice”

8. Uncertainty and Bayesian Methods

- “Amortized Variational Deep Kernel Learning”

- “Bayesian Adaptation of Network Depth and Width for Continual Learning”

- “Beyond Implicit Bias: The Insignificance of SGD Noise in Online Learning”

- “Neural Estimation of Mutual Information without Test-Time Optimization”

9. High-Dimensional Data and Representation Learning

- “Unsupervised Feature Learning for High-Dimensional Data”

- “Invariant Feature Learning with Nonlinear Projections”

- “Deconstructing the Goldilocks Zone of Neural Network Initialization”

- “Learning Representations and Associations with Neural Networks”

- “Neural Compression Algorithms: A New Frontier in Data Processing”

Cluster id: 6

Advanced Graph-Based Machine Learning Techniques and Applications

1. Graph Neural Networks (GNN) and Enhancements

- “Enhancing Size Generalization in Graph Neural Networks through Disentangled Representation Learning”

- “Multi-Track Message Passing: Tackling Oversmoothing and Oversquashing in Graph Learning via Preventing Heterophily Mixing”

- “EquiPocket: an E(3)-Equivariant Geometric Graph Neural Network for Ligand Binding Site Prediction”

- “On the Role of Edge Dependency in Graph Generative Models”

- “Networked Inequality: Preferential Attachment Bias in Graph Neural Network Link Prediction”

- “SLOG: An Inductive Spectral Graph Neural Network Beyond Polynomial Filter”

- “Finding Paths by Graph Neural Network in Homogeneous and Heterogeneous Graphs”

- “Learning Graph Representation via Graph Entropy Maximization”

- “PANDA: Expanded Width-Aware Message Passing Beyond Rewiring”

- “Sign is Not a Remedy: Multiset-to-Multiset Message Passing for Learning on Heterophilic Graphs”

- “Pairwise Alignment Improves Graph Domain Adaptation”

2. Graph Representation, Encoding, and Learning

- “Geometric Algebra based encoding for graph prompting”

- “Graph2Token: Make LLMs Understand Molecule Graphs”

- “Quantum Positional Encodings for Graph Neural Networks”

- “Generalized Sobolev Transport for Probability Measures on a Graph”

- “Graph Positional and Structural Encoder”

- “Relational Deep Learning: Graph Representation Learning on Relational Databases”

- “Interactome-scale comparison of co-immunoprecipitation and yeast two-hybrid assays for protein interaction prediction”

- “Geometric Algebra based encoding for graph prompting”

- “Multi-View Stochastic Block Models”

- “Graph Adversarial Diffusion Convolution”

3. Diffusion Models for Graphs

- “Hyperbolic Geometric Latent Diffusion Model for Graph Generation”

- “Graph Diffusion Models and Applications to Semi-Supervised Learning”

4. Knowledge Graphs, Causality, and Causal Learning

- “Generalization Error of Graph Neural Networks in the Mean-field Regime”

- “Causal Reasoning in Graphs: Aligning with Neural Tangent Kernels”

- “Scalable and Flexible Causal Discovery with an Efficient Test for Adjacency”

- “Generalizing Knowledge Graph Embedding with Universal Orthogonal Parameterization”

5. Applications in Biology and Chemistry

- “Predicting and Interpreting Energy Barriers of Metallic Glasses with Graph Neural Networks”

- “A Space Group Symmetry Informed Network for O(3) Equivariant Crystal Tensor Prediction”

- “Injecting Hierarchical Biological Priors into Graph Neural Networks for Flow Cytometry Prediction”

6. Graph Transformers

- “Graph External Attention Enhanced Transformer”

- “Aligning Transformers with Weisfeiler-Leman”

- “Less is More: on the Over-Globalizing Problem in Graph Transformers”

- “Triplet Interaction Improves Graph Transformers: Accurate Molecular Graph Learning with Triplet Graph Transformers”

- “Position: Graph Foundation Models Are Already Here”

- “Weisfeiler Leman for Euclidean Equivariant Machine Learning”

- “Homomorphism Counts for Graph Neural Networks: All About That Basis”

- “A Graph is Worth $K$ Words: Euclideanizing Graph using Pure Transformer”

- “Diffusing Knowledge in Graph Transformers”

7. Explainability and Robustness

- “Encoding to Explain: Enhancing Explainable Machine Learning for Graphs”

- “How Interpretable Are Interpretable Graph Neural Networks?”

- “Graph Neural Networks Use Graphs When They Shouldn’t”

- “Graph Distillation with Eigenbasis Matching”

- “Exploring Correlations of Self-Supervised Tasks for Graphs”

- “Explaining Graph Neural Networks via Structure-aware Interaction Index”

8. Unsupervised and Self-supervised Learning

- “Unsupervised Episode Generation for Graph Meta-learning”

- “Disentangled Graph Self-supervised Learning under Distribution Shifts”

- “From Coarse to Fine: Enable Comprehensive Graph Self-supervised Learning with Multi-granular Semantic Ensemble”

9. Graph Data Handling, Augmentation, and Mixup

- “Graph Data Augmentation and Mixup Techniques for Improved Model Performance”

- “Perfect Alignment May be Poisonous to Graph Contrastive Learning”

- “Augmentation and Mixup for Enhancing Graph Learning Techniques”

10. Advanced Topics in Graph Theory and Learning

- “Editors, Readers, Writers: Graph Neural Network-based Text Editors”

- “Incremental Topological Ordering and Cycle Detection with Predictions”

- “Neural Tangent Kernels Motivate Cross-Covariance Graphs in Neural Networks”

- “Unraveling the Impact of Heterophilic Structures on Graph Positive-Unlabeled Learning”

Cluster id: 7

Advanced Generative Models and Methods in Machine Learning

1. Diffusion Models & Generative Methods

- “Diffusion Language Models Are Versatile Protein Learners”

- “Training-Free Inference Acceleration of Diffusion Models”

- “Hidden Learning Dynamics of Capability before Behavior in Diffusion Models”

- “Reflected Flow Matching”

- “Diffusion Models Encode the Intrinsic Dimension of Data Manifolds”

- “Diffusion Tempering Improves Parameter Estimation with Probabilistic Integrators for Ordinary Differential Equations”

- “Efficient Mixture Learning in Black-Box Variational Inference”

- “Enhancing Implicit Shape Generators Using Topological Regularizations”

- “Particle Denoising Diffusion Sampler”

- “Confronting Reward Overoptimization for Diffusion Models: A Perspective of Inductive and Primacy Biases”

- “Enhancing Trajectory Prediction through Self-Supervised Waypoint Distortion Prediction”

- “Switched Flow Matching: Eliminating Singularities via Switching ODEs”

- “Fast Timing-Conditioned Latent Audio Diffusion”

- “Switched Flow Matching: Eliminating Singularities via Switching ODEs”

2. Protein Design and Simulation

- “AlphaFold Meets Flow Matching for Generating Protein Ensembles”

- “Floating Anchor Diffusion Model for Multi-motif Scaffolding”

- “Sequence-Specific Folding by Stochastic Diffusions”

- “Generative Diffusion Networks for RNA Design”

- “Protein Conformation Generation via Diffusion Processes”

- “Tutorial Design and Verification for Protein Folding Models”

- “Diffusion-Based Methods for Protein Design”

- “Topological Regularizations in Diffusion Models for Shape Generations”

- “Leverage Denoising Diffusion Model for Training Stability”

3. Conditional Generative Models

- “Dynamic Multi-Resolution Denoising Models for Inference”

- “Retrieval-Augmented Diffusion for 3D Molecule Generation”

- “Conditional Sampling with Discrete Diffusion Models”

- “Model-Free Adaptive Control with Denoising Diffusion”

- “From Fourier to Neural ODEs: Flow Matching for Modeling Complex Systems”

4. Image and Video Generation & Editing

- “MagicPose: Realistic Human Pose and Facial Expression Retargeting with Identity-aware Diffusion”

- “Single-Model Attribution of Generative Models Through Final-Layer Inversion”

- “Compositional Image Decomposition with Diffusion Models”

- “Conditional Normalizing Flows for Active Learning of Coarse-Grained Molecular Representations”

- “Multi-Region Markovian Gaussian Process: An Efficient Method to Discover Directional Communications Across Multiple Brain Regions”

- “Gaussian Pro: 3D Gaussian Splatting with Progressive Propagation”

5. Structure-based Drug and Molecular Design

- “Drug Discovery with Dynamic Goal-aware Fragments”

- “Molecular Design Optimization Using Energy-Based Models”

- “Structure-based drug design by denoising voxel grids”

- “Rethinking Molecular Design: Integrating Latent Variable and Auto-Regressive Models for Enhanced Goal Directed Generation”

- “Generative Flows on Discrete State-Spaces: Enabling Multimodal Flows with Applications to Protein Co-Design”

6. Scientific Applications & Physical Systems Modeling

- “OxyGenerator: Reconstructing Global Ocean Deoxygenation Over a Century with Deep Learning”

- “Physical Systems Simulation Using Deep Learning”

- “Neural Jump-Diffusion Temporal Point Processes”

- “Robotics and Dynamics Systems”

- “Data-efficient Algorithms for Scientific Simulation”

7. Machine Learning for Optimization & Inference

- “Robust Classification via a Single Diffusion Model”

- “Improved Variational Inference via Denoising Diffusion”

- “Mean-field Underdamped Langevin Dynamics and its Spacetime Discretization”

- “Bayesian Power Steering: An Effective Approach for Domain Adaptation of Diffusion Models”

- “Adaptive Sampling of k-Space in Magnetic Resonance for Fast Pathology Prediction”

8. Audio and Music Generation

- “Zero-Shot Unsupervised and Text-Based Audio Editing Using DDPM Inversion”

- “Time Series Diffusion in the Frequency Domain”

- “Neural Diffusion Models for Long-form Music Generation”

- “Latent Audio Diffusion Models”

- “MusicFlow: Cascaded Flow Matching for Text Guided Music Generation”

Cluster id: 8

Efficient Training and Inference for Large Language Models

1. Efficient and Scalable LLM Inference and Training

- “Accelerated Speculative Sampling Based on Tree Monte Carlo”

- “CaM: Cache Merging for Memory-efficient LLMs Inference”

- “DéjàVu: KV-cache Streaming for Fast, Fault-tolerant Generative LLM Serving”

- “Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference”

- “Fewer Truncations Improve Language Modeling”

- “GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection”

- “Getting the most out of your tokenizer for pre-training and domain adaptation”

- “HexGen: Generative Inference of Large Language Model over Heterogeneous Environment”

- “Learning Linear Block Error Correction Codes”

- “Scaling Beyond the GPU Memory Limit for Large Mixture-of-Experts Model Training”

- “Trainable Transformer in Transformer”

- “Tandem Transformers for Inference Efficient LLMs”

- “Transformers with Loss Shaping Constraints for Long-Term Time Series Forecasting”

- “Variance-reduced Zeroth-Order Methods for Fine-Tuning Language Models”

- “Wukong: Towards a Scaling Law for Large-Scale Recommendation”

2. Transformer Architectures and Enhancements

- “Better & Faster Large Language Models via Multi-token Prediction”

- “Bifurcated Attention for Single-Context Large-Batch Sampling”

- “CLLMs: Consistency Large Language Models”

- “Compositional Capabilities of Autoregressive Transformers: A Study on Synthetic, Interpretable Tasks”

- “From Generalization Analysis to Optimization Designs for State Space Models”

- “Improving Transformers with Dynamically Composable Multi-Head Attention”

- “LeaPformer: Enabling Linear Transformers for Autoregressive and Simultaneous Tasks via Learned Proportions”

- “On the Embedding Collapse when Scaling up Recommendation Models”

- “Repeat After Me: Transformers are Better than State Space Models at Copying”

- “SLAB: Efficient Transformers with Simplified Linear Attention and Progressive Re-parameterized BatchNorm”

- “State-Free Inference of State-Space Models: The Transfer Function Approach”

3. Quantization and Compression Techniques

- “Accurate LoRA-Finetuning Quantization of LLMs via Information Retention”

- “BiLLM: Pushing the Limit of Post-Training Quantization for LLMs”

- “Extreme Compression of Large Language Models via Additive Quantization”

- “Inference in Memory: Co-locating INference and Far-memory Efficiently”

- “LQER: Low-Rank Quantization Error Reconstruction for LLMs”

- “Outlier-aware Slicing for Post-Training Quantization in Vision Transformer”

- “QuIP#: Even Better LLM Quantization with Hadamard Incoherence and Lattice Codebooks”

- “Rethinking Optimization and Architecture for Tiny Language Models”

- “SAMformer: Unlocking the Potential of Transformers in Time Series Forecasting with Sharpness-Aware Minimization and Channel-Wise Attention”

4. Parameter-Efficient Fine-Tuning and Adaptation

- “DoRA: Weight-Decomposed Low-Rank Adaptation”

- “Efficient World Models with Time-Aware and Context-Augmented Tokenization”

- “Flextron: Many-in-One Flexible Large Language Model”

- “LoRA+: Efficient Low Rank Adaptation of Large Models”

- “Parameter Efficient Quasi-Orthogonal Fine-Tuning via Givens Rotation”

- “PEFT: Parameter-Efficient Fine-Tuning with Increased Robustness”

- “Pruning Small Pre-Trained Weights $\textit{Irreversibly}$ and $\textit{Monotonically}$ Impairs “Difficult” Downstream Tasks in LLMs”

- “SparseTSF: Modeling Long-term Time Series Forecasting with 1k Parameters”

5. Algorithms for Language and Sequence Tasks

- “A Fast Tree Search Procedure for Language Models”

- “Algorithm and Hardness for Dynamic Attention Maintenance in Large Language Models”

- “Benchmarking and Building Long-Context Retrieval Models with LoCo and M2-BERT”

- “Cell2Sentence: Teaching Large Language Models the Language of Biology”

- “Improving Token-Based World Models with Parallel Observation Prediction”

- “Learning Solution-Aware Transformers for Efficiently Solving Quadratic Assignment Problem”

- “Memory Efficient Neural Processes via Constant Memory Attention Block”

- “NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models”

- “Sparse is Enough in Fine-tuning Pre-trained Large Language Models”

6. Novel Training and Learning Paradigms

- “Adapted Training of Large-Scale Non-parallel Translation Models for e-LTE License Test Extraction”

- “Amortizing Pragmatic Program Synthesis with Rankings”

- “Bayesian Program Learning by Decompiling Amortized Knowledge”

- “Bidirectional Equivariant Long-Range DNA Sequence Modeling”

- “DeepCortex: Deep Learning Training on Low-Memory Devices through Artificial Intelligence”

- “Language Models are Super Mario: Absorbing Abilities from Homologous Models as a Free Lunch”

- “Learning from Students: Applying t-Distributions to Explore Accurate and Efficient Formats for LLMs”

- “Practical Performance Guarantees for Pipelined DNN Inference”

- “Pre-Training Protein Bi-level Representation Through Span Mask Strategy On 3D Protein Chains”

7. Efficient Computation Techniques in Various Domains

- “Auto-Regressive Next-Token Predictors are Universal Learners”

- “Auctionformer: A Unified Deep Learning Algorithm for Solving Equilibrium Strategies in Auction Games”

- “CARTE: Pretraining and Transfer for Tabular Learning”

- “Differentiable Model Scaling using Differentiable Topk”

- “Efficient and Effective Time-Series Forecasting with Spiking Neural Networks”

- “ELF: Encoding Speaker-Specific Latent Speech Features for Speech Synthesis”

- “Hierarchical State Space Models for Continuous Sequence-to-Sequence Modeling”

- “How Do Nonlinear Transformers Learn and Generalize in In-Context Learning?”

- “PIDformer: Transformer Meets Control Theory”

- “Sparse-IFT: Sparse Iso-FLOP Transformations for Maximizing Training Efficiency”

Cluster id: 9

Advanced Techniques and Theoretical Insights in Machine Learning

1. Data Augmentation and Domain Adaptation

- “The good, the bad and the ugly sides of data augmentation: An implicit spectral regularization perspective”

- “Robustness of Deep Learning for Accelerated MRI: Benefits of Diverse Training Data”

- “Adaptive Robust Learning using Latent Bernoulli Variables”

- “Scaling Laws for the Value of Individual Data Points in Machine Learning”

2. Causal Inference and Treatment Effects

- “Can a Few Decide for Many? The Metric Distortion of Sortition”

- “Individual Treatment Effect Estimation with Confounding Adjustment”

- “Adaptive Learning in Personalized Treatments and Policies”

- “Inferring the Long-Term Causal Effects of Long-Term Treatments from Short-Term Experiments”

3. Fairness and Ethics in Machine Learning

- “Fair Off-Policy Learning from Observational Data”

- “Attribution-based Explanations that Provide Recourse Cannot be Robust”

- “The Relative Value of Prediction in Algorithmic Decision Making”

- “Fair Data Representation for Machine Learning at the Pareto Frontier”

4. Adversarial Examples and Robustness

- “Adversarially Robust Deep Multi-View Clustering”

- “Robust Universal Adversarial Perturbations”

- “Efficient Online Set-valued Classification with Bandit Feedback”

- “Two Heads are Actually Better than One: Towards Better Adversarial Robustness via Transduction and Rejection”

5. Explainability and Interpretability

- “TimeX++: Learning Time-Series Explanations with Information Bottleneck”

- “Explaining Temporal Black-Box Models via Functional Decomposition”

- “Manifold Integrated Gradients: Riemannian Geometry for Feature Attribution”

- “Counterfactual Explanations for Structured Prediction”

6. Machine Learning Theory

- “Provable Benefit of Cutout and CutMix for Feature Learning”

- “Interplay of ROC and Precision-Recall AUCs: Theoretical Limits and Practical Implications”

- “Theoretical Analysis of Learned Database Operations under Distribution Shift through Distribution Learnability”

- “On the sample complexity of conditional independence testing with Von Mises estimator with application to causal discovery”

7. Time-Series and Sequential Data

- “Dynamic Survival Analysis with Controlled Latent States”

- “Generalization Analysis of Learned Database Operations under Distribution Shift through Distribution Learnability”

- “Meta-Learners for Partially-Experimental Treatment Effect Estimation”

- “Time-Series Forecasting for Out-of-Distribution Generalization Using Invariant Learning”

8. Optimization and Learning Algorithms

- “Conformal Prediction for Multi-dimensional Time Series by Ellipsoidal Sets”

- “Kernel Debiased Plug-in Estimation”

- “Adaptive Constraint Modification via Grouping and Selection for Hardness-Preserving MILP Instance Generation”

- “Optimal Kernel Choice for Score Function-based Causal Discovery”

9. Uncertainty Quantification and Calibration

- “Uncertainty Estimation by Density Aware Evidential Deep Learning”

- “Robust Universal Adversarial Perturbations”

- “T-Cal: An Optimal Test for the Calibration of Predictive Models”

- “Pseudo-Calibration: Improving Predictive Uncertainty Estimation in Unsupervised Domain Adaptation”

10. Clustering and Anomaly Detection

- “ODIM: Outlier Detection via Likelihood of Under-Fitted Generative Models”

- “Scaling Laws for the Value of Individual Data Points in Machine Learning”

- “Active Statistical Inference”

- “Robust Universal Adversarial Perturbations”

11. Data Selection and Labeling

- “Refined Coreset Selection: Towards Minimal Coreset Size under Model Performance Constraints”

- “Longitudinal Targeted Minimum Loss-based Estimation with Temporal-Difference Heterogeneous Transformer”

- “Careful with that Scalpel: Improving Gradient Surgery with an EMA”

- “Doubly Robust Causal Effect Estimation under Networked Interference via Targeted Learning”

12. Deep Learning Methods

- “Improving Robustness to Multiple Spurious Correlations by Multi-Objective Optimization”

- “Robust Universal Adversarial Perturbations”

- “Meta-Learners for Partially-Identified Treatment Effects Across Multiple Environments”

- “Generalized Feature Attribution for Large Models & Data”

13. Bayesian Learning

- “Bayesian Structural Causal Models for High-dimensional Causal Inference”

- “Think Big: Estimating the Long-Term Effects of Large-Scale Policies with Bayesian Models”

- “Bayesian Uncertainty for Gradient Aggregation in Multi-task Learning”

- “Bayesian Neural Network Adaptation with Integrative Covariance Estimation”

14. Image and Vision

- “Sensitivity Sampling in Pixel-wise Regression Learning”

- “Pivot: Architecture-Specific Adaptations in Image-based Tasks”

- “Trained Random Forests Completely Reveal your Dataset”

- “Efficient Precision and Recall Metrics for Assessing Generative Models using Hubness-aware Sampling”

15. Semi-Supervised and Unsupervised Learning

- “SSL4Q: Semi-Supervised Learning of Quantum Data with Application to Quantum State Classification”

- “Active Adaptive Experimental Design for Treatment Effect Estimation with Covariate Choice”

- “Learning Variable Latent Layers in Semi-supervised Neural Networks”

- “Non-linear Gaussian Processes for Unsupervised Generalizations”